The Grimoire: Tome of Cursed Knowledge

This is the place where I collect all the knowledge earned during my self taught computer science journey, a grimoire that freely, available to be consulted at any time.

Rather than leaving my notes scattered across random bookmarks, Discord servers, notebooks and Google Docs, this grimoire serves as a single source of truth for all the things that I'm learning during in this tedious, painful path.

If you're reading this, you're either past-me, future-me, or an unfortunate soul who has stumbled upon my secret knowledge. Either way, remember:

-

🛠️ Debugging is an art, not a science.

-

💀 Despite accumulating knowledge, there will still always be a moment where you'll be thrown into the unknown, left to figure it out. That's just how things are.

Setting Up SSH for GitHub

This will make GitHub stop asking you for your username and access token every time.

Step 1: Check if you already have a key

- Before generating a new one, check if you already have an SSH key:

ls -al ~/.ssh

If you see files like id_rsa and id_rsa.pub (or id_ed25519 and id_ed25519.pub), you probably already have an SSH key. If not, generate one.

Step 2: Generate a New SSH key (if needed)

If you don't have an existing SSH key, generate a new one:

ssh-keygen -t ed25519 -C "yourmail@example.com"

- When it asks for a file location, just press Enter (this will save it in

~/.ssh/id_ed25519). - When it asks for a passphrase, you can leave it empty (or set one for extra security).

Step 3: Add Your SSH Key to the SSH Agent

- Now, you need to add the key to your local SSH agent so it gets used automatically:

eval "$(ssh-agent -s)"

- Then add your key:

ssh-add ~/.ssh/id_ed25519

(If you used rsa, replace id_ed25519 with id_rsa.)

Step 4: Copy Your SSH Key to GitHub

Now, you need to add your SSH key to your GitHub account.

- Copy the key to your clipboard:

cat ~/.ssh/id_ed25519.pub

It will output something like:

ssh-ed25519 AAAAC3Nza...yourlongpublickeyhere yourmail@example.com

- Go to GitHub → SSH Keys Settings

- Click "New SSH Key", paste your key, and give it a name.

- Save it.

Step 5: Test the Connection

- Check if GitHub recognizes your SSH key:

ssh -T git@github.com

If everything is set up correctly, you should see:

Hi <your-github-username>! You've successfully authenticated, but GitHub does not provide shell access.

Step 6: Change Your Git Remote to Use SSH

- If your Git remote is still using HTTPS (which asks for a password), switch it to SSH:

git remote -v

If you see:

origin https://github.com/your-username/repository.git (fetch)

origin https://github.com/your-username/repository.git (push)

- Change it to SSH:

git remote set-url origin git@github.com:your-username/repository.git

Now, every push/pull will use SSH, and you’ll never have to enter your password again.

The Skinny Ruby Queen: Minimal Dockerfile for Production

1. Build Stage

- We're building in style. Ruby + Alpine = skinny legend

FROM ruby:3.3-alpine AS build

- Install a full dev toolchain to compile native gems (yes, Ruby still lives in C land)

RUN apk add --no-cache build-base

- Set the working directory—aka the sacred ground where it all happens

WORKDIR /usr/src/app

- Copy only Gemfile and lockfile first (layer caching magic)

COPY Gemfile Gemfile.lock ./

- Configure bundler to install gems locally under vendor/bundle. This will be copied over to the final image later like a blessed artifact

RUN bundle config set --local path 'vendor/bundle' \

&& bundle install

- Copy the rest of your application—code, chaos, and all

COPY . .

2. Final Stage

- A clean Alpine base with Ruby and none of that build baggage. We like our containers light.

FROM ruby:3.3-alpine

- Set the working dir again (yes, you need to re-declare it—Docker has no memory of its past life)

WORKDIR /usr/src/app

- Copy everything from the build stage, including those precious compiled gems

COPY --from=build /usr/src/app /usr/src/app

- Let Ruby know where the gems are—because it forgets if you don’t tell it

ENV GEM_PATH=/usr/src/app/vendor/bundle/ruby/3.3.0

ENV PATH=$GEM_PATH/bin:$PATH

- Install only the runtime dependencies needed for your app to vibe

RUN apk add --no-cache \

libstdc++ \ # C++ runtime

libffi \ # Needed by some gems (e.g., FFI, psych)

yaml \ # YAML parsing

zlib \ # Compression stuff

openssl \ # HTTPS, TLS, etc.

tzdata # So your logs don’t think it's 1970

- Declare yourself: prod mode on

ENV RACK_ENV=production

ENV PORT=8080

EXPOSE 8080

- Finally, launch the Ruby app like the main character it is

CMD ["ruby", "server.rb"]

Some useful commands:

docker build -t your-image-name .

docker images

docker run -p 8080:8080 your-image-id

docker rmi your-image-id

docker container prune

Pro Tips from the Underworld: If you're using gems that compile C extensions (like pg, nokogiri, ffi), you’ll likely need additional Alpine dependencies, e.g.:

RUN apk add --no-cache build-base libxml2-dev libxslt-dev postgresql-dev

For scripts that are long-running, consider using:

CMD ["ruby", "start.rb"]

Or even:

CMD ["rackup", "--host", "0.0.0.0", "--port", "8080"]

Deploying mdBook to GitHub Pages With GitHub Actions

Step 1: Setup the Repo

-

Create a new GitHub repo.

-

Run:

cargo install mdbook

mdbook init my-docs

cd my-docs

Step 2: Add GitHub Actions Workflow

- Create .github/workflows/deploy.yml:

name: Deploy mdBook to GitHub Pages

on:

push:

branches:

- main

jobs:

deploy:

runs-on: ubuntu-latest

steps:

- name: Checkout repository

uses: actions/checkout@v3

- name: Install mdBook

run: cargo install mdbook

- name: Build the book

run: mdbook build

- name: Setup SSH Authentication

run: |

mkdir -p ~/.ssh

echo "${{ secrets.SSH_PRIVATE_KEY }}" > ~/.ssh/id_ed25519

chmod 600 ~/.ssh/id_ed25519

ssh-keyscan github.com >> ~/.ssh/known_hosts

- name: Deploy to GitHub Pages

uses: peaceiris/actions-gh-pages@v3

with:

github_token: ${{ secrets.GITHUB_TOKEN }}

publish_dir: ./book

SSH_PRIVATE_KEY -> (id_ed25519)

GITHUB_TOKEN -> GitHub adds this automatically

Step 3: Add Secrets

- Generate a separate SSH key for CI/CD

ssh-keygen -t ed25519 -C "GitHub Actions Deploy Key"

-

Go to Repo -> Settings -> Secrets and Variables -> Actions

-

Add

SSH_PRIVATE_KEY-> Paste the private key (id_ed25519) -

Go to Repo -> Settings -> Deploy key

-

Paste the public key (

id_ed25519.pub)

Step 4: Enable Permissions

- Go to Repo -> Settings -> Actions -> General

- Under Workflow Permissions, enable: ✅ Read and Write Permissions ✅ Allow GitHub Actions to create and approve pull requests

Step 5: Push and Deploy

git add .

git commit -m "Deploy Book"

git push origin main

If it all goes well, your docs should be live.

CI/CD Pipeline Setup for Cloud Run

Deploy your projects automatically with a simple git commit and git push. To do this, you need to Install the gcloud CLI

Step 1: Test Locally with Docker

Build the image and test before pushing anything to Google Cloud.

docker build -t my-portfolio .

docker run -p 8080:8080 my-portfolio

- Fix any port, environment, or dependency issues locally first.

- Once it works locally, move on to Google Cloud.

Step 2: Set Up Google Cloud

-

Before running these commands, be sure to:

- Check current GCP project:

gcloud config list project- Set active project

gcloud config set project YOUR_PROJECT_ID- You can also view all projects your account can access:

gcloud projects list -

Enable the required APIs (run these in your terminal):

gcloud services enable \

cloudbuild.googleapis.com \

run.googleapis.com \

artifactregistry.googleapis.com

This ensures Google Cloud has all necessary services activated.

- Create an Artifact Registry repo for Docker images:

gcloud artifacts repositories create portfolio-repo \

--repository-format=docker \

--location=europe-west1 \

--description="Docker repository for portfolio deployment"

This stores your container images so Cloud Run can pull them.

Step 3: Create a Service Account for GitHub Actions

- Create a user for CI/CD:

gcloud iam service-accounts create github-deployer \

--description="GitHub Actions service account" \

--display-name="GitHub Deployer"

This creates a dedicated user for deploying the app.

- Grant it permissions:

gcloud projects add-iam-policy-binding $YOUR_PROJECT_ID \

--member=serviceAccount:github-deployer@$YOUR_PROJECT_ID.iam.gserviceaccount.com \

--role=roles/run.admin

gcloud projects add-iam-policy-binding $YOUR_PROJECT_ID \

--member=serviceAccount:github-deployer@$YOUR_PROJECT_ID.iam.gserviceaccount.com \

--role=roles/artifactregistry.writer

gcloud projects add-iam-policy-binding $YOUR_PROJECT_ID \

--member=serviceAccount:github-deployer@$YOUR_PROJECT_ID.iam.gserviceaccount.com \

--role=roles/storage.admin

GitHub Actions can now push images & deploy to Cloud Run.

- Generate a key file for the service account:

gcloud iam service-accounts keys create key.json \

--iam-account=github-deployer@$YOUR_PROJECT_ID.iam.gserviceaccount.com

This creates key.json, which contains the credentials.

Add Secrets to GitHub

-

Go to your GitHub repo -> Settings -> Secrets and Variables -> Actions

-

Add two secrets in Secrets -> repository secrets:

1.GCP_SERVICE_ACCOUNT_KEY → Copy & paste the full contents of key.json.

2.GCP_PROJECT_ID → Your Google Cloud project ID.

Now, GitHub Actions can authenticate with Google Cloud

Step 5: Create GitHub Actions Workflows (deploy.yml)

- In your repo, create: .github/workflows/deploy.yml

name: Deploy to Cloud Run

on:

push:

branches:

- main

jobs:

deploy:

runs-on: ubuntu-latest

steps:

- name: Checkout repository

uses: actions/checkout@v3

- name: Authenticate with Google Cloud

uses: google-github-actions/auth@v2

with:

credentials_json: ${{ secrets.GCP_SERVICE_ACCOUNT_KEY }}

- name: Set Up Google Cloud SDK

run: |

gcloud auth configure-docker europe-west2-docker.pkg.dev

- name: Build and push Docker Image

run: |

docker build -t europe-west1-docker.pkg.dev/${{ secrets.GCP_PROJECT_ID }}/portfolio-repo/portfolio .

docker push europe-west1-docker.pkg.dev/${{ secrets.GCP_PROJECT_ID }}/portfolio-repo/portfolio

- name: Deploy to Cloud Run

run: |

gcloud run deploy portfolio-site \

--image europe-west1-docker.pkg.dev/${{ secrets.GCP_PROJECT_ID }}/portfolio-repo/portfolio \

--platform managed \

--region europe-west1 \

--allow-unauthenticated

Now, every push to main will automatically deploy to Cloud Run.

Step 6: Push & Deploy

- Once everything is set up:

git add .

git commit -m "Setup GitHub Actions CI/CD"

git push origin main

Check GitHub Actions -> It should build & deploy your project automatically.

Adding a Domain to Google Cloud Run

I have unfortunately decided to swallow my pride and use the Google Cloud UI for this one.

Step 1: Set Up Domain Mapping

-

Go to the google cloud console -> select the Cloud Run service.

-

Click "Manage custom domains"

-

CLick Add Mapping -> "Add service domain mapping"

-

Select the service you want to map to -> select your deployed project.

-

Enter your domain name -> Click "Continue"

-

Google Cloud will generate DNS records -> copy these

Step 2: Update DNS Settings in Your Domain Host**

-

Go to your domain provider (Cloudflare, Namecheap, Google Domains, etc.).

-

Paste the DNS records exactly as given.

-

If you are using Cloudflare, set your records to "DNS Only" (disabling proxy mode) so Google can verify them.

Step 3: Verify the DNS Changes

- While waiting, feel free to test your domain name on nslookup.io.

- If the IPv4 and IPv6 addresses matches what Google gave you, then you're good.

Bonus: Enable Subdomains

- Bonus: in your domain host DNS settings, add * as a host, CNAME as type and ghs.googlehosted.com if you want subdomains.

-Now any subdomain (blog.yourdomain.com, api.yourdomain.com, etc.) will automatically work.

Fix: If Your Cloud Run Region Doesn’t Support Domain Mapping

🔥 If you see:

❌ "Domain mappings are not available in this region."

💀 Google Cloud decided your region isn’t good enough.

-

Just edit the YAML file in your repository to switch to a supported one.

-

Commit and push the change.

-

In your Cloud Run services, remove the old container.

How to configure Google Cloud Storage Bucket to store any files

- Create a Google Cloud Storage Bucket. Make sure to pick a unique bucket name!

- Example locations: us-central1, europe-west2, asia-east1.

gcloud storage buckets create gs://UNIQUE_BUCKET_NAME

--location=SERVER_LOCATION

--uniform-bucket-level-access

# Navigate to your folder path

cd ~/Downloads

# Upload the entire "public" folder

gsutil cp -r /public gs://UNIQUE_BUCKET_NAME

# Upload a single file, or an entire folder (later updates)

gsutil cp myfile.png gs://UNIQUE_BUCKET_NAME

gsutil cp -r myfolder gs://UNIQUE_BUCKET_NAME

# Automate Upload with Wildcards (this uploads all .jpg files in the current directory)

gsutil cp *.jpg gs://UNIQUE_BUCKET_NAME

# (Optional) Make files Public. This will make the files publicly accessed via URL.

gsutil iam ch allUsers:objectViewer gs://UNIQUE_BUCKET_NAME

# Access to an image in the folder with your web browser, once uploaded.

https://storage.googleapis.com/UNIQUE_BUCKET_NAME/your-file.jpg

# List Files in the Bucket

gsutil ls gs://UNIQUE_BUCKET_NAME

# Delete Files (if needed)

gsutil rm gs://UNIQUE_BUCKET_NAME/filename.png

Set Up PostgreSQL in a Google Cloud VM using Docker Compose

1. Google Cloud Prep

- Make sure you have a Google Cloud Project set up.

- Enable Billing, Compute Engine, and Cloud SQL Admin API.

- Create a VM instance (Debian, obviously).

2. Generate SSH key locally:

ssh-keygen -t rsa -b 4096 -C "pwatpwat@yourdomain.dev"

Hit Enter a few times to use default paths (~/.ssh/id_rsa).

3. Connect to the VM instance

Connect

gcloud config set project pwatgres

pwatgres = project name.

- Check if the first connection worked:

gcloud config list

- Add your SSH key to the project:

gcloud compute os-login ssh-keys add --key-file=~/.ssh/id_rsa.pub

- Confirm access:

gcloud compute ssh pwat-db-vm --zone=europe-west1-b

Basic Post-Boot Hardening

Firewall with ufw:

sudo apt update && sudo apt upgrade -y

sudo apt install ufw -y

sudo ufw allow OpenSSH

sudo ufw enable

Fail2Ban (basic brute-force protection)

sudo apt install fail2ban -y

sudo systemctl enable fail2ban

sudo systemctl start fail2ban

4. Docker Setup

sudo apt update && sudo apt install docker.io -y

sudo systemctl enable docker

sudo systemctl start docker

- Test if the daemon hears your call:

docker --version

- Install Docker Compose:

sudo apt install docker-compose -y

docker-compose --version

- Let Yourself Command the Docker Army

sudo usermod -aG docker $USER

newgrp docker

You now have Docker privileges without needing sudo every time like a mortal.

5. Create Docker Compose Project

mkdir ~/pwatgres && cd ~/pwatgres

nano docker-compose.yml

version: '3.8'

services:

postgres:

image: postgres:16

restart: always

container_name: pwatgres

env_file:

- .env

ports:

- "5432:5432"

volumes:

- pgdata:/var/lib/postgresql/data

volumes:

pgdata:

- Create the .env file

Inside ~/pwatgres/:

nano .env

Example contents:

POSTGRES_DB=mydb

POSTGRES_USER=admin

POSTGRES_PASSWORD=changemepls

Save and close. DO NOT commit this if you ever sync this repo.

You can lock this .env file down with:

chmod 600 .env

Deploy that beast

docker-compose up -d

Misc

- To shut down gracefully:

sudo shutdown +1 "The API layer dreams tonight. Goodnight, sweet daemon."

Security: Avoid Paying for Google’s Mistakes

- Set up a billing alert. If your database starts scaling up unnecessarily, you will get charged.

- Limit instance size in Compute Engine (e.g., ec2-nano).

Create an HMAC Server API with Python

from fastapi import FastAPI, Request, HTTPException

import hmac

import hashlib

app = FastAPI()

def calc_digest(key, message):

key = bytes(key, 'utf-8')

message = bytes(message, 'utf-8')

dig = hmac.new(key, message, hashlib.sha256)

return dig.hexdigest()

# HMAC Server

@app.post("/verify")

async def verify_signature(request: Request):

body = await request.json()

recieved_mac = request.headers.get("X-HMAC-Signature")

if not recieved_mac:

raise HTTPException(status_code=400, detail="Missing HMAC header")

msg_string = f"{body['mac_address']}:{body['timestamp']}"

expected_mac = calc_digest('secret-key', msg_string)

if not hmac.compare_digest(recieved_mac, expected_mac):

raise HTTPException(status_code=403, detail="Invalid signature")

return {"status": "Verified"}

Testing HMAC Protected Endpoints with curl (Bash Script)

#!/bin/bash

MESSAGE='{"mac_address":"12:34:56:78:9a:bc","timestamp":"2025-04-30T15:00:00"}'

SIGNATURE=$(echo -n '{"mac_address":"12:34:56:78:9a:bc","timestamp":"2025-04-30T15:00:00"}' |

openssl dgst -sha256 -hmac "secret-key" | sed 's/^.* //')

curl -X POST http://127.0.0.1:8000/verify \

-H "Content-Type: application/json" \

-H "X-HMAC-Signature: $SIGNATURE" \

-d "$MESSAGE"

Establish connection with an HMAC client (for example, with Ruby)

require 'openssl/hmac'

require 'mac-address'

# HMAC Client

class Hmac

def self.call

key = secret_key

mac_address = MacAddress.address

halt 404, 'Mac Address not found' if mac_address.nil?

timestamp = Time.now.to_i

message = "#{mac_address}:#{timestamp}"

mac = calc_digest(key, message)

{ signature: mac, timestamp: timestamp, mac_address: mac_address }

end

def self.secret_key

ENV['API_DB_KEY'] || raise('Missing API_DB_KEY')

end

def self.calc_digest(key, message)

OpenSSL::HMAC.hexdigest('sha256', key, message)

end

end

Set up a LAMP server

Prerequisites: Type 2 hypervisor. In this guide, we're going to use VirtualBox (but you can always use virt-manager on Linux).

Configuring a Debian VM:

- Downloading Debian on www.debian.org (this will be the operating system on which will run the virtual machine.

- Download, install and configure VirtualBox and VirtualBox Extension Pack

- For VirtualBox: accept everything and proceed.

- For VirtualBox Extensions Pack:

- Open the downloaded file

- Click on Installation

- Accept the terms of service

- Tools → Extensions hamburger menu to check that the extension is installed.

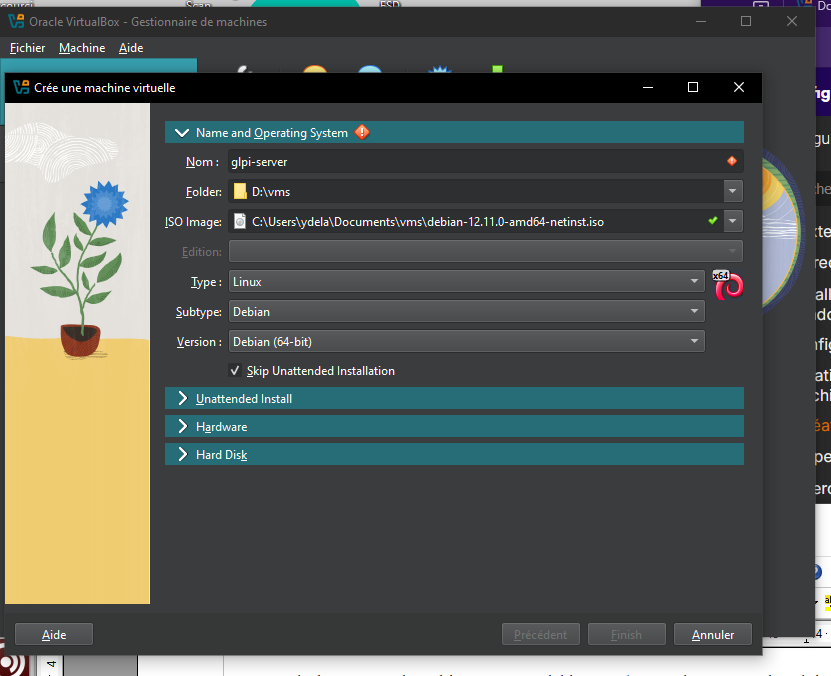

- Creation of the Debian Virtual Machine:

- Choose an appropriate name for the VM

- Choose the Debian ISO image you downloaded earlier

- Check "Skip Unattended Installation"

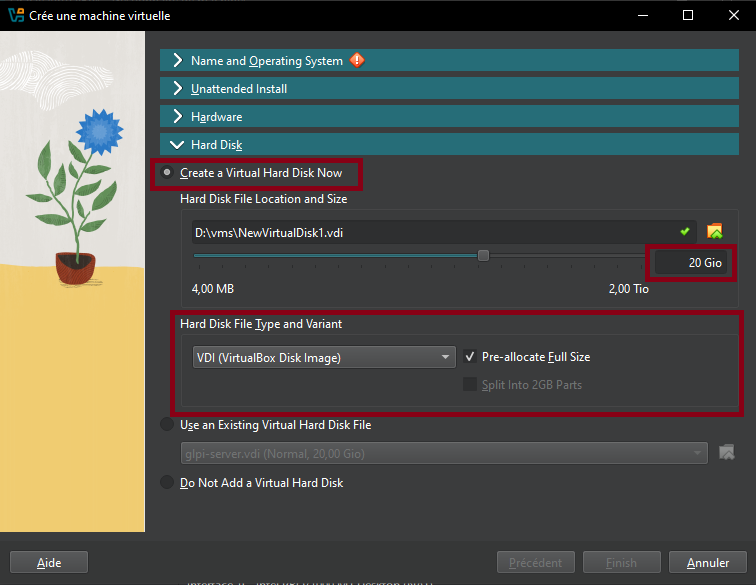

- Resources to be assigned: 2048MB of RAM, 1-2 CPUs and 10-20GB of hard drive.

- Click "Finish", and you should see the machine appear in the VM list if there are no problems.

Additional Configurations:

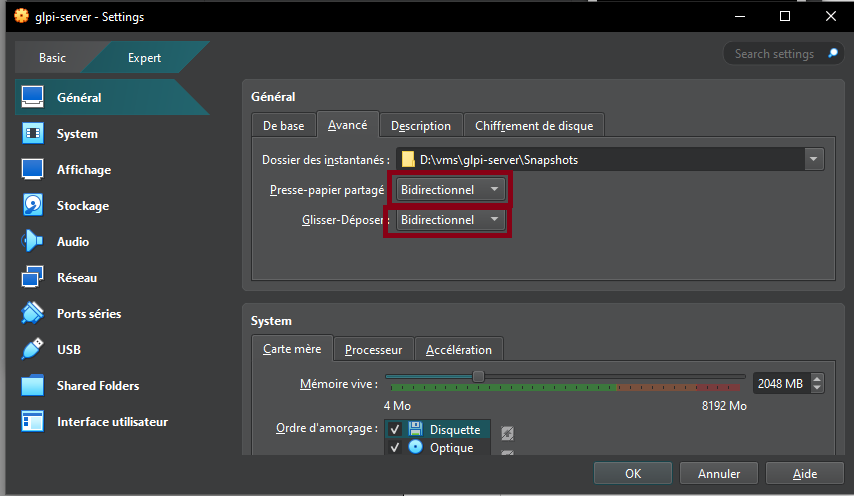

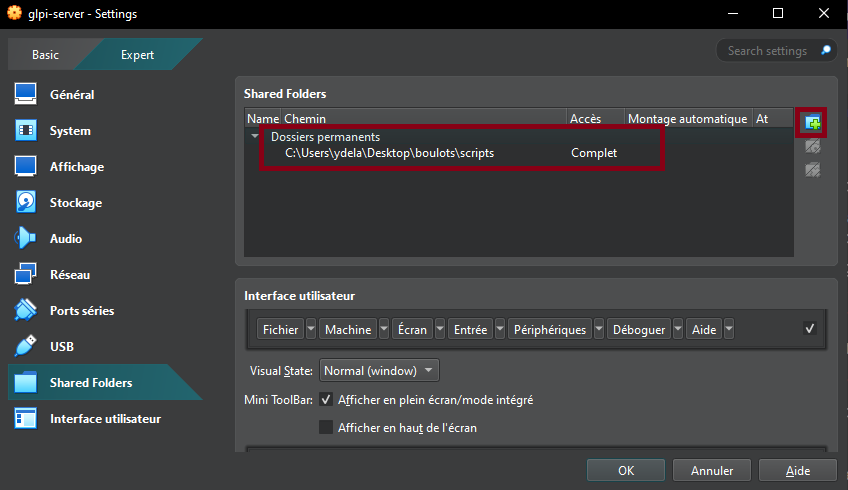

- Enable shared clipboard and drag and drop in General → Advanced

- Enable shared folders in Settings → Shared Folders (here I added a scripts folder)

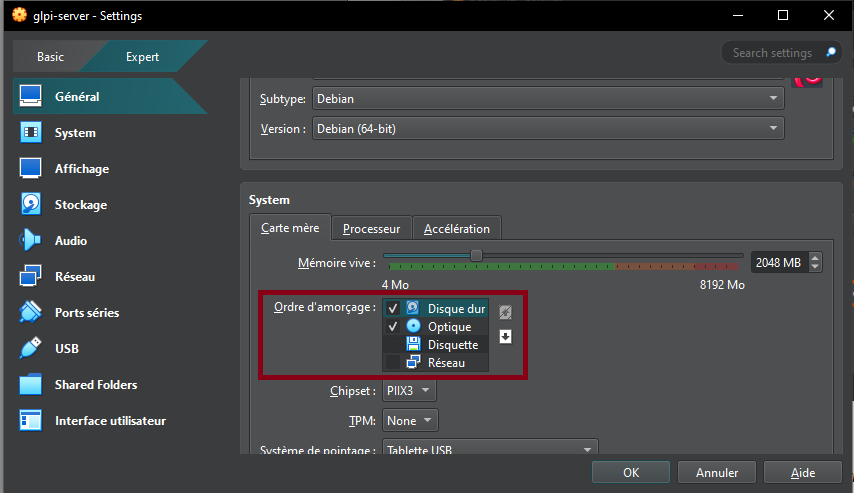

In the "System" tab, change the boot order to:

- Hard Drive (Checked)

- Optical Drive (Checked)

- Floppy Drive (Unchecked)

- Network Drive (Unchecked)

- In the "Display" tab → Screen, increase the "Video Memory" slider to 128MB and check the "Enable 3D Acceleration" box.

- In the "Network" tab, check that the "Enable Network Interface" and "Cable Connected" boxes are enabled. The network access mode must also be set to "NAT".

- In the "USB" tab, enable the USB controller and check the "USB 3.0 Controller (xHCI)" button.

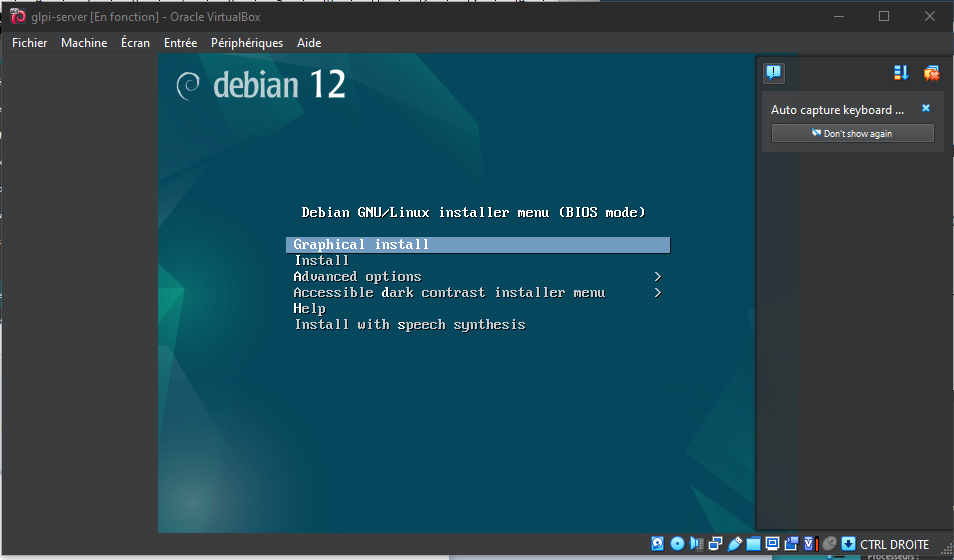

You may finally boot up the Debian Virtual Machine.

- Partition formatting: Yes.

- At the taskbar step, Debian will ask you to choose which software to pre-install. You must check "SSH Server," leave "Common System Utilities" checked, and check "XFCE Desktop", or a different one of your convenience.

- Install the GRUB boot program: Yes, and select the disk that appears.

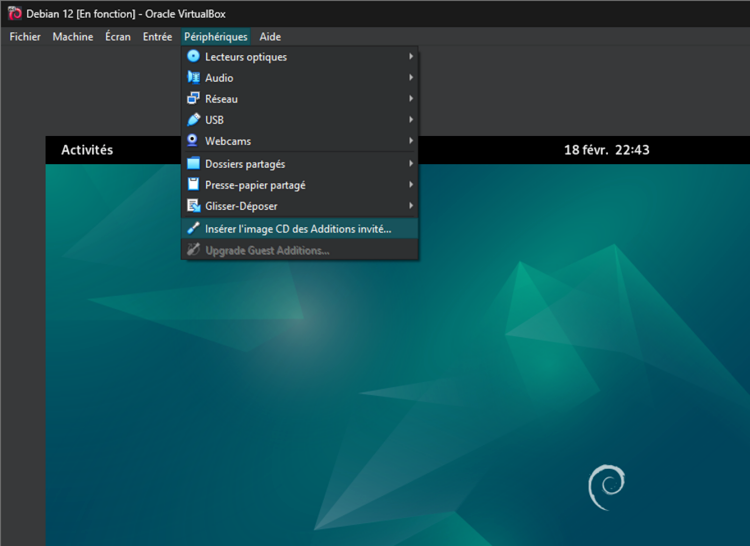

VirtualBox Guest Additions:

- In the menu bar, click "Devices", then "Insert Guest Additions CD Image".

- Access the optical drive that just appeared.

- Right-click → open a terminal.

- Become root by running the su command and entering your password.

- Enter the command sh ./VboxLinuxAdditions.run to start the installation of the "Guest Additions".

- Restart the VM.

- Make sure that in the "Devices" menu, "Shared Clipboard" and "Drag & Drop" are checked to "Bidirectional".

- Right Ctrl + f: to enable/disable full screen.

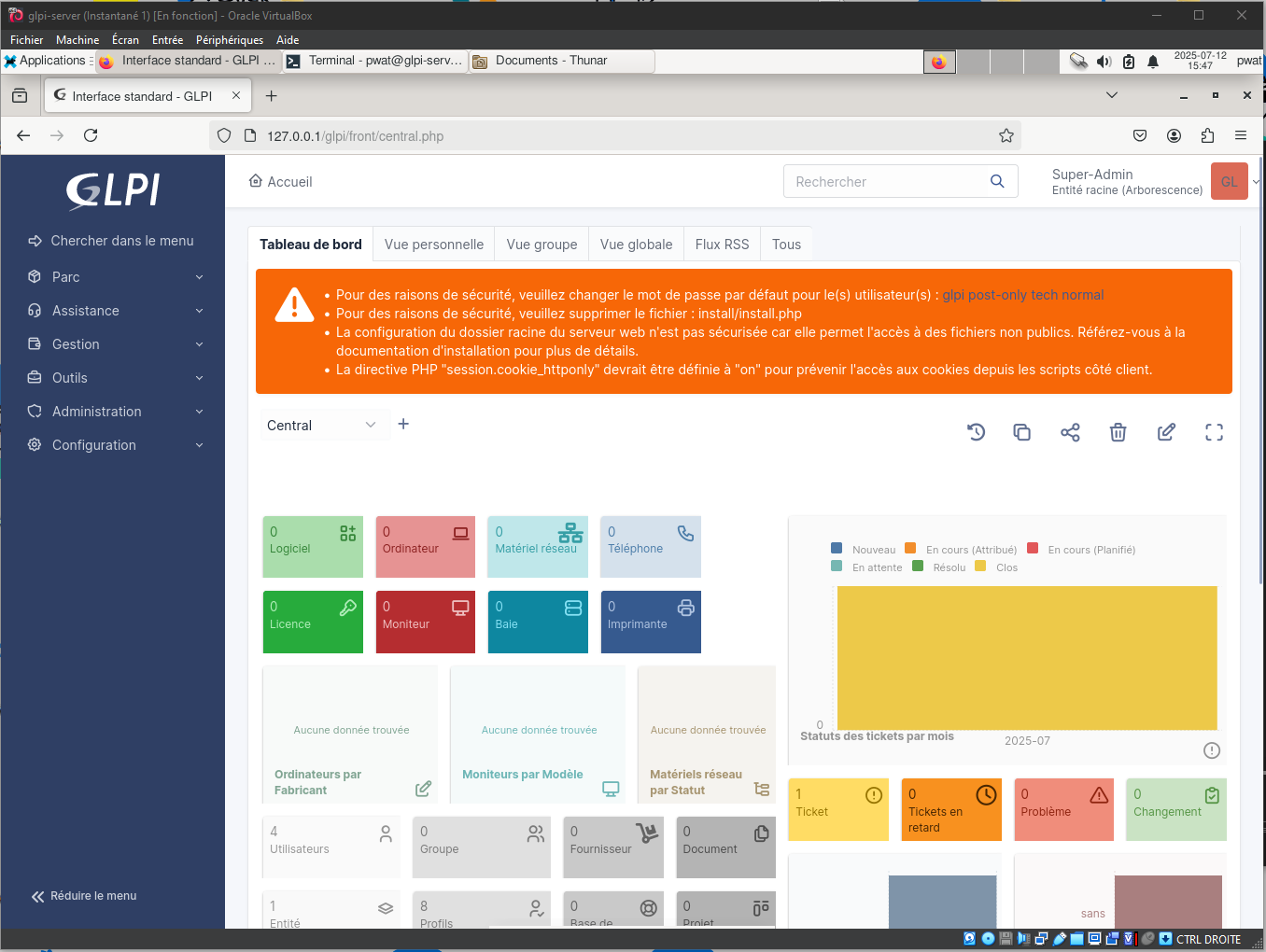

GLPI Installation

- Once Debian is installed, create a Bash script called setup_glpi.sh that will set up the LAMP stack. This script requires root access.

- The LAMP stack consists of the Apache2 web server, a MariaDB database and the PHP language.

- If "sudo" doesn't work for some reason, you can use "su" instead for the time being. In my situation, I had to install it manually from apt and grant my own user to the sudo group.

#!/usr/bin/env bash

# setup_glpi.sh

set -e

# before running the script, make sure to do chmod +x setup_glpi.sh

# this script needs root permissions. run it with sudo ./setup_glpi.sh

echo "Updating and upgrading..."

apt update && apt upgrade -y

echo "Installing Apache..."

apt install apache2 -y

echo "Installing MariaDB..."

apt install mariadb-server -y

echo "Securing MariaDB..."

mysql_secure_installation

echo "Installing PHP + extensions..."

apt install php php-mysql php-xml php-mbstring php-curl php-gd php-ldap php-imap php-zip php-intl libapache2-mod-php -y

echo "Restarting Apache..."

systemctl restart apache2

- Connect to the MariaDB database to create a database and user for GLPI

sudo mysql -u root -p

# In the MySQL shell:

CREATE DATABASE glpidb;

CREATE USER 'glpiuser'@'localhost' IDENTIFIED BY 'pwatpwat';

GRANT ALL PRIVILEGES ON glpidb.* TO 'glpiuser'@'localhost';

FLUSH PRIVILEGES;

EXIT;

- Download and deploy GLPI to the virtual machine

cd /tmp

wget https://github.com/glpi-project/glpi/releases/download/10.0.13/glpi-

10.0.13.tgz

tar -xvzf glpi-10.0.13.tgz

# Move it to web root

sudo mv glpi /var/www/html/

# Set permissions

sudo chown -R www-data:www-data /var/www/html/glpi

sudo chmod -R 755 /var/www/html/glpi

# Enable Apache mod_rewrite just in case

sudo a2enmod rewrite

sudo systemctl restart apache2

- Open your web browser

- Type

ip a | grep inetin the terminal to find the machine's IP address - Enter this IP address in your browser, and you should see the default Apache 2 page.

- Append /glpi to the address, and you should see the GLPI installation page.

Problems that may arise during installation:

- If you forgot a PHP extension, the installer will error out. You will need to find it and install it in the terminal (

apt install php-xxx) and then restart the Apache 2 server (systemctl restart apache2).

Some example GLPI configuration:

GLPI Database:

Server: localhost

User: glpiuser

Password: pwatpwat

Use an existing database: glpidb

The default usernames and passwords are:

- glpi/glpi for the administrator account

- tech/tech for the technician account

- normal/normal for the normal account

- post-only/postonly for the postonly account

You can delete or modify these accounts as well as the initial data. And with that, the GLPI server has been successfully installed!

Once you are able to see a result similar to this screenshot, it is safe to remove the GLPI installation file with:

rm /var/www/html/glpi/install/install.php

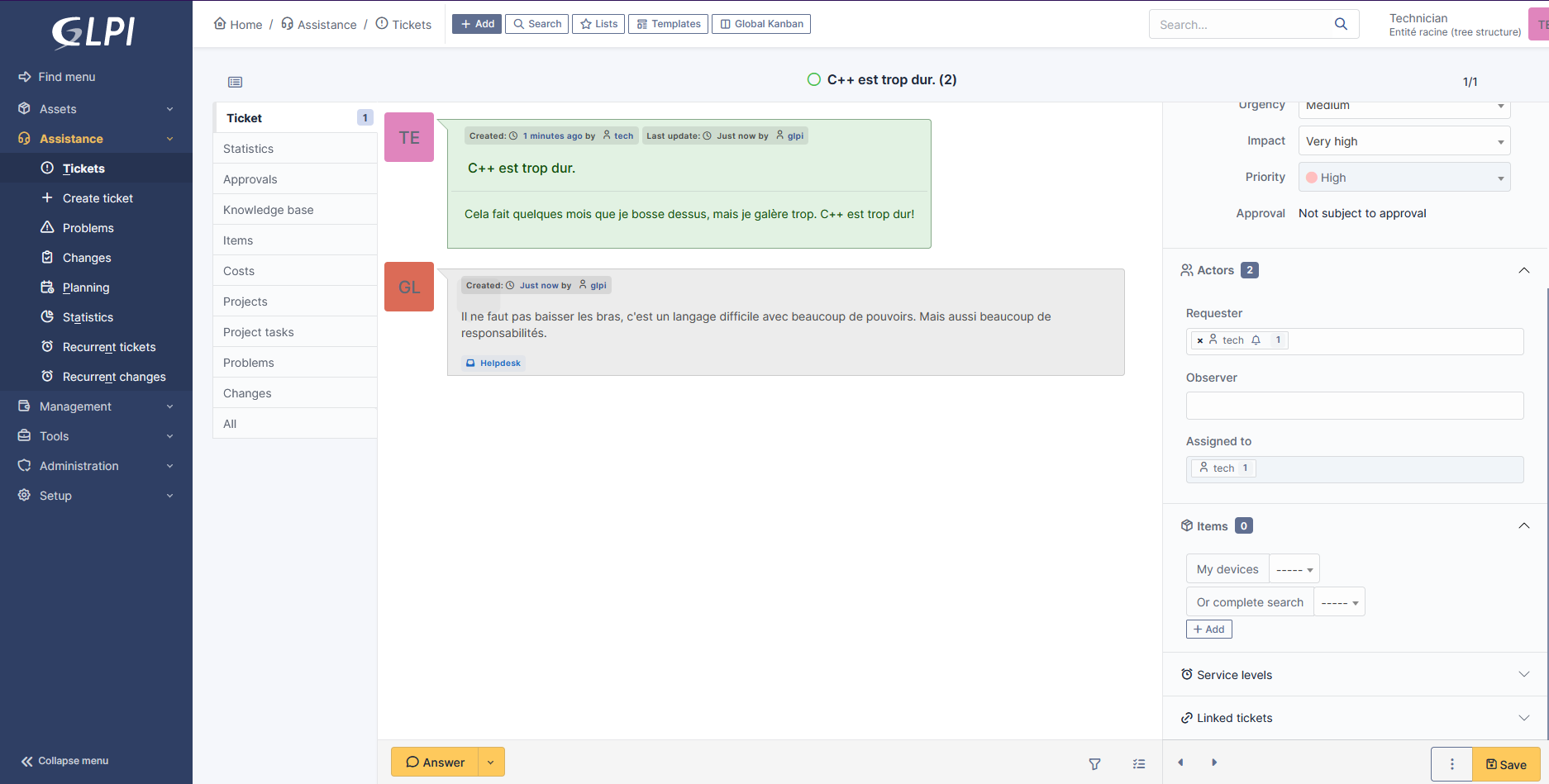

Connect to GLPI from a computer on the same network

-

From the VM, type

ip ato find the IP address to connect to. -

Since NAT is used for the VM, in VirtualBox you will need to go to VM → Configuration → Network → Adapter 1 → Port Forwarding.

-

Add a rule:

Name: HTTP Protocol: TCP Host IP (leave blank) Host port: 8080 Guest IP (leave blank) Guest port: 80 -

Then, on the host machine, access the address: http://localhost:8080/glpi

-

If all goes well, you have successfully connected to your VM's Apache server from the host machine. Log in with the Technician account, for example.

Create a snapshot

It's important to create snapshots regularly in case of mishandling.

- Click the VM's hamburger menu

- Snapshots

- Click "Take" and add a title and description.

Virt Manager Install on Arch Linux

- First, update the system:

sudo pacman -Syu

- Install virt-manager and its dependencies:

sudo pacman -S virt-manager qemu vde2 ebtables dnsmasq bridge-utils openbsd-netcat

- Enable and start the

libvirtdservice:

sudo systemctl enable libvirtd.service

sudo systemctl start libvirtd.service

- Add your user to the

libvirtandkvmgroups:

sudo usermod -aG libvirt $USER

sudo usermod -aG kvm $USER

You may need to log out and log back in for the group changes to take effect.

And you’ll be able to:

- Create VMs from ISO images

- Use bridged networking or NAT (for proper sysadmin testing)

- Assign cores, RAM, disk

- Take snapshots

Creating a New Virtual Machine

-

Open Virtual Machine Manager

-

Click "Create a new virtual machine"

-

Choose "Local Install Media (ISO)"

-

Select your ISO

-

Assign CPU and Memory

- 2 CPUs and 4GB Ram for Windows 7, for example.

- Create a disk

- Allocate an appropriate size.

- Make it qcow2 format

- Check "Customize configuration before install"

Customizing Before Install

-

Check that Firmware is set to BIOS

-

Add the VirtIO ISO

- You need to grab the appropriate version from here

- Go to “Add Hardware” → Storage → , Select or create custom storage, Add the VirtIO ISO

- Set it as CD-ROM, SATA

Using Virt Manager and Samba to retrieve my old songs

-

FL Studio does not work on Linux

-

I have found a backup of my songs, they are neither .wav or .flac files but FL Studio project files.

-

The solution is to open an instance of FL Studio in a Windows 7 Virtual Machine, and export those project files in .wav from there. In this guide I will use Samba to transfer files between the host and the VM.

-

For the VirtIO ISO: grab

virtio-win-0.1.173.isofor example, Windows 7 support has ended in newer versions.

After having configured the Windows 7 Virtual Machine, access the virtio-win ISO that has been attached as a CD-ROM via Virt Manager. Use the installer located inside the guest-agent folder.

- If the installation has been successful, you can turn off the virtual machine for now.

Samba File Sharing Setup (for transferring files in/out of Win7)

Step 1: Virtual Network Interface

Here is how you can display your available Network Interfaces:

ip link show

You could also use nmcli:

nmcli device status

OR:

nmcli connection show

If virbr0 isn't showing up here, try this:

sudo virsh net-start default

With this command, virbr0 should appear when using the nmcli device status command.

- Inside Virt-Manager, change the network settings of the VM to:

- Network source: Bridged device...

- Device name: virbr0

- Device model: e1000e

- MAC address: yes

Step 2: Install Samba on the host

- On the host machine:

sudo pacman -S samba

Step 3: Backup the default smb.conf file, then edit it

sudo mv /etc/samba/smb.conf /etc/samba/smb.conf.bak

sudo nano /etc/samba/smb.conf

Here is a configuration example (smb.conf):

[global]

server string = Arch Server

workgroup = RINCORP

security = user

map to guest = Bad User

name resolve order = bcast host

include = /etc/samba/shares.conf

Then, create the shares.conf file:

sudo nano /etc/samba/shares.conf

This is an example for Public files:

[vmshare]

path = /share/vmshare

force user = smbuser

force group = smbgroup

create mask = 0664

force create mode = 0664

directory mask = 0775

force directory mode = 0775

public = yes

writable = yes

This is an example for Protected files:

[Protected Files]

path = /share/private_share

force user = smbuser

force group = smbgroup

create mask = 0664

force create mode = 0664

directory mask = 0775

force directory mode = 0775

public = yes

writable = no

- Run this command to check for syntax errors in the config file:

testparm -s

Step 4: Create those folders

sudo mkdir -p /share/vmshare

sudo mkdir /share/private_share

Confirm their existence:

ls -l share/

Step 5: Create users and groups

sudo groupadd --system smbgroup

cat /etc/group

sudo useradd --system --no-create-home --group smbgroup -s /bin/false smbuser

cat /etc/passwd

sudo chown -R smbuser:smbgroup /share

sudo chmod -R g+w /share

Step 6: Restart Samba

sudo systemctl restart smb

sudo systemctl status smb

Here is where you can see the Samba logs:

cat /var/log/samba/log.smbd

Step 7: Windows BS

On the client Windows 7 machine:

- Open the Run command and type "secpol.msc"

- Click on "Local Policies": "Security Options"

- Change Network security: LAN Manager Authentication Level to “Send NTLMv2 response only”

- Change Network security: Minimum Session Security for NTLM SSP to disable “Require 128-bit encryption” into “No Minimum Security”.

Press Win + R, type:

\\<your-host-IP>\vmshare

If this works, you can map it as a network drive:

- Right click Computer: Map network drive

- Pick a drive letter

- Put the path

\\<your-host-IP>\vmshare - Check "Reconnect at logon"

Step 8: Snapshot and Profit

It is a good idea to create a snapshot at this point.

- Go to the virtual machine viewer, and click "Manage VM snapshots"

- Click on the "plus" button located in the bottom left corner, provide a name and description for the snapshot, and click on the "Finish" button. In my case, I have gone for an external snapshot.

I will now load the suspicious FL studio installer into this vmshare folder, and export my old songs.

This chapter will mostly cover Debian-type distributions.

Setting up nftables Firewall Rules For Debian-type Distributions

Before diving into configurations, you might want to check if nftables is already installed and active on your Debian system.

- Check if nftables is Installed

dpkg -l | grep nftables

- If it's installed, you'll see an entry like:

ii nftables 0.9.8-3 amd64 Netfilter nf_tables userspace utility

- If it's not installed, install it using:

sudo apt update && sudo apt install nftables

- Check if nftables is running

sudo systemctl status nftables

Expected output if running:

● nftables.service - Netfilter Tables Loaded: loaded (/lib/systemd/system/nftables.service; enabled; vendor preset: enabled) Active: active (exited) since …

If it is inactive or stopped, you can start and enable it:

sudo systemctl enable --now nftables

Step 1: Defining a Firewall

These following commands will:

-

Define a Firewall

-

Create a new table named

filterin the IPv4(ip) family. -

Create a chain inside

filterto process incoming traffic (input). -

It sets the hook to "input" (i.e., traffic directed at this machine).

-

Priority 0 means it runs after other kernel hooks.

-

sudo nft add rule ip filter input dropDrops all incoming traffic by default. This means no connections are allowed unless explicitly permitted later. -

sudo nft list ruleset -aDisplays the current ruleset, including handle numbers, which are useful if you need to modify or delete specific rules. -

sudo nft delete rule ip filter input handle 2Deletes the rule with handle 2 (you need to check the handle number in your setup).

sudo nft add table ip filter

sudo nft add chain ip filter input {type filter hook input priority 0\;}

sudo nft add rule ip filter input drop

sudo nft list ruleset -a

sudo nft delete rule ip filter input handle 2

Step 2: Enable Specific Ports

These following commands:

- Allows SSH (port 22) connections if they are:

- New (first time a connection is made).

- Established (continuing an existing session).

inetsupports both IPv4 and IPv6 in one go.- Opens ports 22 (SSH), 80 (HTTP), and 443(HTTPS).

sudo nft add rule inet filter input tcp dport 22 ct state new,established accept

sudo nft add rule inet filter input tcp dport { 22, 80, 443 } ct state new,established accept

Step 3: Save & Persist the Firewall

- Save the current firewall rules into a file named

firewall.config. - Reload the firewall from the saved configurations.

sudo nft list ruleset > firewall.config

sudo nft -f firewall.config

Reloads the firewall from the saved configuration.

- If you want to persist the rules across reboots, enable the systemd service:

sudo systemctl enable nftables.service

Avoiding the Network Cut-off Problem

Firewall misconfiguration can lock you out if you're SSH-ing into a remote server. Here’s how to avoid getting locked out:

- Always Allow SSH First Before you apply the drop-all rule, make sure to allow SSH connections first:

sudo nft add rule inet filter input tcp dport 22 ct state new,established accept

Then you can safely run:

sudo nft add rule ip filter input drop

-

Have a Backup Terminal

- Open a second SSH session before applying firewall rules.

- If something goes wrong, you can restore settings from the backup session.

-

Use a "Grace Period" Rule Instead of locking yourself out immediately, you can set a temporary rule that auto-expires:

sudo nft add rule ip filter input tcp dport 22 accept timeout 5m

This allows SSH access for 5 minutes, giving you time to fix mistakes before the rule disappears.

How to set up the ufw firewall

sudo pacman -S ufw

sudo ufw default deny incoming

sudo ufw default allow outgoing

sudo ufw allow samba

sudo ufw enable

If sudo ufw allow samba for example, does not work:

Create the file:

sudo nano /etc/ufw/applications.d/samba

Paste the content:

[Samba]

title=LanManager-like file and printer server for Unix

description=The Samba software suite is a collection of programs that implements the SMB/CIF$

ports=137,138/udp|139,445/tcp

🔍 CLI Tools to See Background Services (the Cool Girl Terminal Way)

🧙♀️ ps aux

This is the classic spell for peeking into the underworld of processes.

ps aux

- Lists all processes.

- USER, PID, %CPU, %MEM, and the command path.

- Pipe it to less or grep for sanity.

Example: See what’s using Postgres

ps aux | grep postgres

top / htop (More Visual)

top: Built-in, real-time process overview.

htop: Fancy, colored, scrollable version. (You will want this.)

htop

Install it with:

sudo apt install htop # Debian-based

# or

sudo xbps-install -S htop # Void Linux

Use F10 to exit, arrow keys to scroll, and F9 to send kill signals like a Linux grim reaper.

🧼 List Only Services

🚫 systemd:

If you're on a systemd-based distro (not Void, so skip this if you're on musl Void), use:

systemctl list-units --type=service

☠️ runit (Void Linux)

If you're using Void: you’re blessed. You get runit, not that systemd drama.

To list services:

sv status /var/service/*

Each service will say run if active.

You can stop services with:

sudo sv stop <service>

Start them:

sudo sv start <service>

Build something from source

tar -xvzf fftw-<version>.tar.gz

cd fftw-<version>

./configure --enable-shared

make

sudo make install

This chapter will mostly cover PostgreSQL.

PostgreSQL Local Setup Guide

Use the postgres superuser to create a new user, a new database and manage their permissions. For all future database operations and for production, only use the created my_project_user.

The following guide might seem a little over-engineered for a casual app, but it will ensure a level of security conform to production level applications.

Step 1: Login as superuser

sudo -i -u postgres psql

if it fails, you may need to restart Postgres with:

/etc/init.d/postgresql restart

- The default Postgres installation comes with a superuser called postgres.

- We use this account to set up new users and databases.

You can fill in with your own informations, store them in a file (such as setup.sql) and use them in production.

Step 2: Create a New Database, Two New Users and A Separate Schema

- Inside the Postgres shell (

psql) run (or better: write into yoursetup.sqlfile):

-- Create a database user (replace with a strong password) and a temporary admin

CREATE USER temp_admin WITH PASSWORD 'temp_admin_password';

CREATE USER my_project_user WITH PASSWORD 'supersecurepassword';

-- Create the database and assign ownership to the user

GRANT my_project_user TO temp_admin;

CREATE DATABASE my_project_db OWNER temp_admin;

GRANT CONNECT ON DATABASE my_project_db TO my_project_user;

-- If you want isolation from the default public schema, create a custom schema:

CREATE SCHEMA my_project_schema AUTHORIZATION my_project_user;

ALTER DATABASE my_project_db SET search_path TO my_project_schema;

GRANT USAGE ON SCHEMA my_project_schema TO my_project_user;

- This ensures that your database is not owned by the postgres superuser.

- The my_project_user will have full control over my_project_db, but no power over system-wide databases.

- From here, this guide assumes you have created my_project_schema.

Step 3: Restrict Dangerous Permissions

By default, new users can create or drop objects inside the project schema. We don’t want that.

-- Explicitly grant CREATE on schema

GRANT CREATE ON SCHEMA my_project_schema TO my_project_user;

-- Explicitly remove DROP privileges on existing tables

REVOKE DROP ON ALL TABLES IN SCHEMA my_project_schema FROM my_project_user;

ALTER DEFAULT PRIVILEGES FOR ROLE my_project_user IN SCHEMA my_project_schema

REVOKE DROP ON TABLES FROM my_project_user;

- This prevents accidental database-wide modifications.

- The user will still be able to read and modify existing tables.

Step 4: Enforce Security Best Practices

You should prevent the user from becoming a superuser, creating other databases and creating new users.

ALTER USER my_project_user WITH NOSUPERUSER NOCREATEDB NOCREATEROLE;

Step 5: Allow CRUD Operations

-- Grant CRUD operations to the user, and ensure it has access to future tables as well

ALTER DEFAULT PRIVILEGES FOR ROLE my_project_user IN SCHEMA my_project_schema

GRANT SELECT, INSERT, UPDATE, DELETE ON TABLES TO my_project_user;

Step 6: Grant Usage on Sequences (Critical for Auto Increments)

ALTER DEFAULT PRIVILEGES FOR ROLE my_project_user IN SCHEMA my_project_schema

GRANT USAGE, SELECT, UPDATE ON SEQUENCES TO my_project_user;

Step 7: Drop temp_admin

Since at this point, temp_admin has only been used to create a new database, it still has full ownership and is a security risk. You should reassign everything and then delete it. If you want, you can always keep it and modify its permissions separately, but this is a pragmatic and secure solution.

-- Reassign all objects owned by temp_admin to my_project_user

REASSIGN OWNED BY temp_admin TO my_project_user;

-- Remove any remaining privileges

DROP OWNED BY temp_admin;

-- Finally, delete the user

DROP USER temp_admin;

Step 8: Exit and Verify Setup

\l: List all databases

\du: List all users and their roles

\q: Exit Postgres shell

- Show a user's privilege:

SELECT * FROM information_schema.role_table_grants

WHERE grantee='my_project_user';

Step 9: Connect as the New User

If you have created a setup.sql file with the informations above and filled in with your own data, you can import it into Postgres with this simple command:

psql -U postgres -f setup.sql

Now test logging into your database as the newly created user:

psql -U my_project_user -d my_project_db

Troubleshooting

- Delete database/user (if you messed up, can happen):

DROP DATABASE my_project_db;

DROP USER my_project_user;

- If Postgres refuses to drop a database because it's in use, force disconnect users before deleting:

SELECT pg_terminate_backend (pid)

FROM pg_stat_activity

WHERE datname='my_project_db';

This correctly finds active connections and terminates them.

- If Postgres refuses to drop the user because they still own objects, you might need to do this before dropping the user:

REASSIGN OWNED BY my_project_user TO project_admin;

DROP OWNED BY my_project_user;

DROP USER my_project_user;

- Find Which Database a User Owns

SELECT datname, pg_catalog.pg_get_userbyid(datdba) AS owner

FROM pg_database;

Add the User to your backend .env File

DATABASE_URL=postgres://my_project_user:supersecurepassword@localhost/my_project_db

- This keeps credentials outside of the codebase.

- Use environment variables instead of hardcoding credentials.

Rate Limiting With PostgreSQL

If you have a small app, you do not need to setup an entire new Redis instance. You can instead build your own 'poor mans Redis', with unlogged tables (faster writes and no WAL overhead) and automatic cleanup with a cron job. In this guide, you will learn how to add a rate limiting feature directly onto PostgreSQL, which is useful to greatly reduce the risk of brute force attacks.

These queries are meant to be run from your backend application file, not from psql.

Language Compatibility Notice

- This SQL syntax ($1, $2, etc...) is compatible with Ruby, JavaScript, and Go.

- If using Python (psycopg2), replace $1 with %s.

- If using Java (JDBC), use ? placeholders instead.

- Regardless of the language, make sure to use parametrized queries to prevent SQL injection.

1. The Rate-Limiting Table

Since this is login-related, we can use an UUID identifier and timestamps.

CREATE UNLOGGED TABLE login_attempts (

session_id UUID DEFAULT gen_random_uuid(), -- Secure, unique session tracking

username TEXT NOT NULL,

attempt_count INT DEFAULT 1,

first_attempt TIMESTAMP DEFAULT now(),

PRIMARY KEY (session_id, username) -- Prevent duplicate session-user pairs

);

- Unlogged -> Faster writes, no WAL overhead.

- UUID session identifiers are more reliable than tracking IP addresses -> no risk of blocking users with shared IP, or letting botnets or spoof IPs pass.

2. When a Login Attempt Happens

Now, inserting into this table will automatically generate a secure, unique session identifier.

INSERT INTO login_attempts (username, attempt_count)

VALUES ($1, 1)

ON CONFLICT (username)

DO UPDATE SET

attempt_count = login_attempts.attempt_count + 1

first_attempt = CASE

WHEN login_attempts.first_attempt <= now() - INTERVAL '20 minutes'

THEN now()

ELSE login_attempts.first_attempt

END;

- If it’s a new user, it gets inserted.

- If it already exists, it updates only if the time window hasn’t expired.

- If it has expired, the row stays the same (so it doesn’t increment forever).

3. Checking If the UUID is Blocked

Before processing a login attempt, check if the UUID should be blocked.

SELECT attempt_count FROM login_attempts

WHERE username = $1

AND first_attempt > now() - INTERVAL '20 minutes';

If attempt_count > 5, deny the login request.

4. Automatically Cleaning Up Old Records

- Once an IP ages out of the 20-minute window, we don’t need to track it anymore.

- This step requires a PostgreSQL extension, pg_cron, which you can find here: pg_cron

- Then, you might want to alter your default database configuration file (which you have hopefully created first by following this guide.

ALTER USER my_project_user SET cron.job_run_as_owner = true;

- Set up the pg_cron extension:

CREATE EXTENSION pg_cron;

CREATE OR REPLACE FUNCTION cleanup_old_attempts() RETURNS VOID AS $$

DELETE FROM login_attempts WHERE first_attempt < now() - INTERVAL '20 minutes';

$$ LANGUAGE sql;

-- Auto clean up of old attempts, every 5 minutes

SELECT cron.schedule('*/5 * * * *', $$SELECT cleanup_old_attempts()$$);

- Keeps the table lightweight instead of storing old attempts forever.

- Runs every 5 minutes, but you can tweak as needed.

For deployment

Google Cloud SQL supports pg_cron, but you have to manually enable it since it's disabled by default.

- Go to Google Cloud Console

- Navigate to your PostgreSQL instance

- Enable pg_cron extension

- Go to Configuration -> Flags

- Add a new flag:

shared_preload_libraries = 'pg_cron'- Click 'Save Changes & Restart the instance'.

How to Boot Up Redis

1.Installing Redis on Debian

Add the repository to the APT index, update it, and install Redis:

sudo apt-get install lsb-release curl gpg

curl -fsSL https://packages.redis.io/gpg | sudo gpg --dearmor -o /usr/share/keyrings/redis-archive-keyring.gpg

sudo chmod 644 /usr/share/keyrings/redis-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/redis.list

sudo apt-get update

sudo apt-get install redis

- Then enable and start the service:

sudo systemctl enable redis

sudo systemctl start redis

2. Basic Configuration (to Avoid Chaos)

- Redis has a bad habit of storing everything in RAM, so if you don’t configure it properly, it could eat all your memory and crash your system. (A very unforgiving trait.)

- Edit /etc/redis/redis.conf and set some sanity limits:

maxmemory 256mb

maxmemory-policy allkeys-lru

Explanation:

- Limits Redis to 256MB so it doesn’t consume your entire system.

- Uses allkeys-lru policy, meaning it will automatically remove the least recently used keys once the memory limit is reached.

3. Connecting to Redis

- After installing, you can test it by running:

redis-cli ping

-

If it replies with PONG, congratulations—you've awakened another beast.

-

Set and retrieve a value:

SET spell "fireball"

GET spell

→ Should return "fireball" (instant, no SQL involved).

4. Securing Redis (Because It Trusts Too Much)

-

By default, Redis binds to all interfaces, meaning anyone could connect if they know the IP. That’s bad.

-

Limit Redis to localhost: Edit /etc/redis/redis.conf and change:

# bind 127.0.0.1 ::1

Keep this enabled by default

protected-mode yes

- Set a strong password: Set a password (optional, overkill for local but useful for staging/prod):

requirepass supersecurepassword

🔐 If you do this, don't forget to connect with:

Redis.new(password: ENV['REDIS_PASSWORD'])

- Restart Redis for changes to apply:

sudo systemctl restart redis

Confirm it's only bound to localhost:

sudo ss -tlnp | grep redis

-

SPACE + K= syntax documentation -

SPACE + e= open nvim-tree -

dd+p= cut and paste,yy+p= copy and paste -

SHIFT + V= Select a block of text.gc= Comment out / uncomment

Create a new repository from the command line

echo "# http_server" >> README.md

git init

git add README.md

git commit -m "first commit"

git branch -M main

git remote add origin git@github.com:theflyoccultist/http_server.git

git push -u origin main

Connect to a new repository

-

Open a Terminal

Navigate to the directory where you want to clone the repository.

cd /path/to/your/directory -

Clone the Repository

Run the following command:

git clone https://github.com/theflyoccultist/kepler-rss-feed.gitOr, if using SSH:

git clone git@github.com:theflyoccultist/kepler-rss-feed.git -

Navigate into the Repository

After cloning, navigate into the repository folder:

cd kepler-rss-feed

.gitignore a file that has already been pushed

echo debug.log >> .gitignore

git rm --cached debug.log

git commit -m "Start ignoring debug.log"

Change the URL of a repository:

git remote -v

Then, you can set it with:

git remote set-url origin <NEW_GIT_URL_HERE>

Remove a Git commit which has not yet been pushed

git reset HEAD^

Restore select files to their last committed state:

git restore path/to/file1 path/to/file2

Link to a repo that has been renamed:

git remote -v

git remote set-url origin (new repo name)

git remote -v

YAML Cheatsheet

What is YAML

- Data serializaion language (like XML and JSON)

- Standard format to transfer data

- Extensions : .yaml and .yml

- YAML is a superset of JSON: any valid JSON file is also a valid YAML file

- Data structures defined in line separation and indentation

YAML Use Cases

- Docker-compose, Ansible, Kubernetes and many more

Key value pairs

app: user-authentication

port: 9000

# A comment

version: 1.7

# A second comment

- For strings, you can use either double quotes, single quotes or no quotes at all. If you use \n, you have to use double quotes or YAML don't recognize it.

Objects

microservice:

app: user-authentication

port: 9000

version: 1.7

- The space has to be the exact same for each attribute between objects. You can use an online YAML validator because it is sensitive about those spaces.

Lists & Boolean

microservice:

- app: user-authentication

port: 9000

version: 1.7

deployed: false # yes and no, on and off works too

versions:

- 1.9

- 2.0

- 2.1 # You can use lists inside of list items, always align them.

- app: shopping-cart

port: 9002

versions: [2.4, 2.5, "hello"]

# You can use arrays instead, and have a mix of numbers and strings.

microservices:

- user-authentication

- shopping-cart

Boolean pitfalls:

yes: true # Interpreted as boolean true

no: false # Interpreted as boolean false

on: true # Also interpreted as true

off: false # Also interpreted as false

If you actually want "yes", "no", "on" and "off" as strings, quote them:

user-input: "yes" # String, not a boolean

Use !!str, !!int and !!bool for Explicit Types

Sometimes YAML thinks it knows what you mean. Force it to behave.

bad-example: 00123 # YAML assumes this is an octal number (!!!)

good-example: !!str "00123" # Now it's a string, not octal

Real Kubernetes YAML Configuration Example

- Key-value pairs

- metadata: object

- labels: object

- spec: object

- containers: list of objects

- ports: list

- volumeMounts: list of objects

apiVersion: v1

kind: Pod

metadata:

name: nginx

labels:

app: nginx

spec:

containers:

- name: nginx-container

image: nginx

ports:

- containerPort: 80

volumeMounts:

- name: nginx-vol

mountPath: /usr/nginx/html

- name: sidecar-container

image: curlimages/curl

command: ["/bin/sh"]

args: ["-c", "echo Hello from the sidecar container; sleep 300"]

Multi Line Strings

multilineString: |

this is a multiline String

and this is the next line.

next line

singlelineString: >

this is a single line String

that should be all on one line.

some other stuff

- Use | pipes if you want YAML to interpret this as multi line text. The line breaks will stay.

- Greater than sign > will be interpreted as a single line.

Real Kubernetes examples

apiVersion: v1

kind: ConfigMap

metadata:

name: mosquito-config-file

data:

mosquito.conf: |

log_dest stdout

log_type all

log_timestamp true

listener 9001

- You can put a whole shell script inside a YAML file.

command:

- sh

- -c

- |

http () {

local path="${1}"

set -- -XGET -s --fail

curl -k "$@" "http://localhost:5601${path}"

}

http "/app/kibana"

Environment Variables

- You can access them using a dollar sign inside your YAML configuration.

command:

- /bin/sh

- -ec

- >-

mysql -h 127.0.0.1 -u root -p$MYSQL_ROOT_PASSWORD -e 'SELECT 1'

Placeholders

- Instead of directly defining values, you can put placeholders with double brackets. It gets replaced using a template generator.

apiVersion: v1

kind: Service

metadata:

name: {{ .Values.service.name }}

spec:

selector:

app: {{ .Values.service.app }}

ports:

- protocol: TCP

port: {{ .Values.service.port }}

targetPort: {{ .Values.service.targetport }}

YAML Anchors & Aliases (DRY Principle)

YAML lets you reuse values using anchors (&) and aliases (*)

default-config: &default

app: user-authentication

port: 9000

version: 1.7

microservice:

- <<: *default # Reuses the default config

deployed: false

- app: shopping-cart

port: 9002

version: 2.4

Merge Keys (Combine Multiple Defaults)

Anchors can also be merged into objects:

common-config: &common

logging: true

retries: 3

extra-config:

<<: *common # Merges the common config

retries: 5 # Overrides specific values

Multiple YAML documents

- This is especially useful when you have multiple components for one service. Separate them with three dashes.

apiVersion: v1

kind: ConfigMap

metadata:

name: mosquito-config-file

data:

mosquito.conf: |

log_dest stdout

log_type all

log_timestamp true

listener 9001

---

apiVersion: v1

kind: Secret

metadata:

name: mosquito-secret-file

type: Opaque

data:

secret.file: |

cbdfdfg654fgdfg6f5sb132v1f6sg854g6s8g66IYUHGFKJHGVfd21=

- In Kubernetes, you can use both YAML or JSON, but YAML is cleaner and more readable.

YAML Linting Tools

A CLI tool: yamllint

kubectl apply --dry-run=client -f file.yaml

(Validates YAML syntax for Kubernetes)

Bash

Basically impossible to escape from if you are using Linux, you'd be using it everyday for all sorts of stuff.

Step-by-Step Bash Completion Check-Up 💅

Verify the package is installed:

dpkg -l | grep bash-completion

If nothing shows up:

sudo apt install bash-completion

Reload your .bashrc:

source ~/.bashrc

Test it: Try typing something like:

git ch<TAB><TAB>

You should see suggestions like checkout, cherry-pick, etc.

Or try:

ssh <TAB><TAB>

And see if it lists known hosts.

🔍 Basic Grep Guide

- Search for a word in all .md files

grep "keyword" *.md

- Search recursively through directories

grep -r "keyword" .

- Ignore case

grep -i "keyword" filename.md

- Show line numbers

grep -n "keyword" filename.md

- Combine: recursive, case-insensitive, line numbers

grep -rin "keyword" .

- Use regular expressions (careful—this is where it gets spicy)

grep -E "foo|bar" file.md

curl cheatsheet

Health check of a website

curl -sSf http://example.org > /dev/null

- If the request was successful, it will return... Nothing. If it's unsuccessful, it will try for a while and then return

Could not resolve host: <hostname>

How to test if your rate limiting works:

for i in {1..200}; do curl -s -o /dev/null -w "%{http_code}\n" http://localhost:4567; done

- 1..200: number of requests

- http://localhost:4567: the URL that needs to be tested

Sort of mini projects done in Bash, it is worth adding because despite the language's limitations, you can do quite a lot with it.

Bash Week — FizzBuzz but Cursed (PlingPlangPlong Edition)

This is an advanced FizzBuzz-style exercise, adapted for Bash with O(1) performance. No loops. No Python crutches. Just raw shell logic.

Description:

For a given input, if it's divisible by:

- 3 → output "Pling"

- 5 → output "Plang"

- 7 → output "Plong"

If none of the above, print the number itself.

Initial logic:

This simple program checks if the input number is equal to a modulo of either 3, 5 or 7. This operation however does not take the case where there's several true cases.

#!/usr/bin/env bash

if [ $(("$1" % 3)) -eq 0 ]; then

echo "Pling"

elif [ $(("$1" % 5)) -eq 0 ]; then

echo "Plang"

elif [ $(("$1" % 7)) -eq 0 ]; then

echo "Plong"

else

echo "$1"

fi

New Version:

#!/usr/bin/env bash

sound=""

(($1 % 3 == 0)) && sound+="Pling"

(($1 % 5 == 0)) && sound+="Plang"

(($1 % 7 == 0)) && sound+="Plong"

echo "${sound:-$1}

Notes:

- Uses string concatenation to combine results from multiple modulo checks.

- Uses Bash parameter expansion ${sound:-$1} to fallback to the number if sound is empty.

echo "${sound: -$1}"

-

It’s Bash's way of saying: “If sound is unset or null, use $1 instead.”

-

It’s lazy evaluation like Python’s x if x else y, but uglier and more prone to being misread after midnight.

-

C equivalent:

x ? x : y

Bash Week — Hamming Distance Spell (Char-by-Char Comparison, Final Form)

It calculates the Hamming distance (number of differing characters) between two equal-length strings.

Bash Spell:

#!/usr/bin/env bash

if [[ $# -ne 2 ]]; then

echo "Usage: $0 <string1> <string2>"

exit 1

elif [[ ${#1} -ne ${#2} ]]; then

echo "strands must be of equal length"

exit 1

else

count=0

for ((i = 0; i < ${#1}; i++)); do

a="${1:$i:1}"

b="${2:$i:1}"

if [[ "$a" != "$b" ]]; then

((count++))

fi

done

echo "$count"

fi

Notes:

- Input validation ensures exactly two args and equal string length.

- Uses Bash string slicing to compare characters by index.

- Avoids off-by-one or miscounting bugs from early exits.

- Ideal for scripting challenges, interviews, or shell-based logic tasks.

Bash Week - Bob's Invocation (with Regular Expressions)

This is basically a primitive version of an AI, with a different output depending on the text being inputted. It works as follows:

- The input ends with a question mark: answers: "Sure."

- The input is in uppercase: answers "Whoa, chill out!"

- The input is silence (either nothing or spaces): answers "Fine, be that way!"

- The input is both a question and in uppercase: answers "Calm down, I know what I'm doing!"

#!/usr/bin/env bash

input="$1"

trimmed_input="${input//[^a-zA-Z]/}"

trimmed_input2=$(tr -d ' \t\r' <<<"$input")

is_uppercase=false

is_question=false

is_silence=false

if [[ "$trimmed_input" =~ ^[[:upper:]]+$ ]]; then

is_uppercase=true

fi

if [[ "$trimmed_input2" == *\? ]]; then

is_question=true

fi

if [[ -z "$trimmed_input2" ]]; then

is_silence=true

fi

if [[ "$is_silence" == true ]]; then

echo "Fine. Be that way!"

elif [[ "$is_uppercase" == true && "$is_question" == true ]]; then

echo "Calm down, I know what I'm doing!"

elif [[ "$is_uppercase" == true ]]; then

echo "Whoa, chill out!"

elif [[ "$is_question" == true ]]; then

echo "Sure."

else

echo "Whatever."

fi

Bash Week - Scrabble Score Counter

Using cases, this will take a word as an input and calculate its value if played in Scrabble. Handles edge cases like any non alphabetic characters: in that case, no point is counted.

For example, the word "cabbage" is worth 14 points:

3 points for C

1 point for A

3 points for B

3 points for B

1 point for A

2 points for G

1 point for E

#!/usr/bin/env bash

i=${1,,}

if [[ ! "$i" =~ [a-z] ]]; then

echo 0

exit 0

fi

total=0

for ((j = 0; j < ${#i}; j++)); do

char="${i:j:1}"

case "$char" in

[aeioulnrst]) ((total += 1)) ;;

[dg]) ((total += 2)) ;;

[bcmp]) ((total += 3)) ;;

[fhvwy]) ((total += 4)) ;;

[k]) ((total += 5)) ;;

[jx]) ((total += 8)) ;;

[qz]) ((total += 10)) ;;

*) ((total += 0)) ;;

esac

done

echo "$total"

Bash Week - Armstrong Numbers

An Armstrong number is a number that is the sum of its own digits each raised to the power of the number of digits.

For example:

9 is an Armstrong number, because 9 = 9^1 = 9

10 is not an Armstrong number, because 10 != 1^2 + 0^2 = 1

153 is an Armstrong number, because: 153 = 1^3 + 5^3 + 3^3 = 1 + 125 + 27 = 153

154 is not an Armstrong number, because: 154 != 1^3 + 5^3 + 4^3 = 1 + 125 + 64 = 190

There are no ternary operators in Bash like there can be in C. In the code below, there is an alternate way to write them, while respecting bash's syntax.

#!/usr/bin/bash

result=0

for ((i = 0; i < ${#1}; i++)); do

power=$((${1:i:1} ** ${#1}))

result=$((result + power))

done

[ "$1" == "$result" ] && echo true || echo false

C

Very old, very fast and minimal, basically never goes out of style. If you had to choose only one systems language, go for this one. It bites back though.

Good To Know

Usage of Makefiles

Why use Makefiles in C and C++?

- To avoid doing everything repeatedly and manually.

Have a look at this multi-file project:

functions.h

const char* get_message() {

return "Hello World\n";

}

hello.c

#include <stdio.h>

#include "functions.h"

void hello() {

printf("%s\n", get_message());

}

main.c

int main() {

hello();

return 0;

}

Without Makefiles, you would have to compile those manually:

gcc -Wno-implicit-function-declaration -c main.c

gcc -Wno-implicit-function-declaration -c hello.c

gcc -Wno-implicit-function-declaration -c main.o hello.o -o final

chmod +x final

Whenever you find one mistake and fix it in the source code, you would have to run those commands again! Very annoying. But with Makefiles, you can automate all this. Here is how we would do:

nvim Makefile

CFLAGS = -Wno-implicit-function-declaration

all: final

final: main.o hello.o

@echo "Linking and producing the final application"

gcc $(CFLAGS) main.o hello.o -o final

@chmod +x final

main.o: main.c

@echo "Compiling the main file"

gcc $(CFLAGS) -c main.c

hello.o: hello.c

@echo "Compiling the hello file"

gcc $(CFLAGS) -c hello.c

clean:

@echo "Removing everything but the source files"

@rm main.o hello.o final

And now you can simply do:

make all

./final

make clean

And see all the commands being executed!

$(...)is used so you don't have to copy paste keywords, and can reference them with something shorter.- The

@command is to not display a command to the console. It is just a matter of taste.

Usage of GNU Debugger (GDB)

A debugger is a program that simulates/runs another program and allows you to:

- Pause and continue its execution

- Set "break points" or conditions where the execution pauses so you can look at its state

- View and "watch" variable values

- Step through the program line-by-line (or instruction by instruction)

Getting Started

Compile for debugging:

gcc -Wall -g -O0 program.c -o a.out

- Preserves identifiers and symbols

- Start GDB:

gdb a.out

- Optionally start with command line arguments:

gdb --args a.out arg1 arg2

- Can also be set in GDB

Useful GDB Commands

- Refresh the display:

refresh - Run your program:

run - See your code:

layout next - Set a break point:

break POINT, can be a line number, function name, etc. - Step:

next(nfor short) - Continue (to next break point):

continue - Print a variable's value:

print VARIABLE - Print an array:

print *arr@len - Watch a variable for changes:

watch VARIABLE - Set an argument to a function:

set args number - Use during a Segfault:

backtrace full

Usage on a buggy program (try it!)

#include <stdlib.h>

#include <stdio.h>

#include <math.h>

int sum(int *arr, int n);

int* getPrimes(int n);

int isPrime(int x);

int main(int argc, char **argv) {

int n = 10; //default to the first 10 primes

if(argc = 2) {

atoi(argv[2]);

}

int *primes = getPrimes(n);

int s = sum(primes, n);

printf("The sum of the first %d primes is %d\n", n, s);

return 0;

}

int sum(int *arr, int n) {

int i;

int total;

for(i=0; i<n; i++) {

total =+ arr[i];

}

return total;

}

int* getPrimes(int n) {

int result[n];

int i = 0;

int x = 2;

while(i < n) {

if(isPrime(x)) {

result[i] = x;

i++;

x += 2;

}

}

return result;

}

int isPrime(int x) {

if(x % 2 == 0) {

return 0;

}

for(int i=3; i<=sqrt(x); i+=2) {

if(x % i == 0) {

return 0;

}

}

return 1;

}

Usage on an infinite loop

If you run this program, it will get stuck in an infinite loop. Let's find out what is causing it.

gcc -g -lm -O0 -std=c99 -w primes.c

gdb a.out

layout next

run

And if you do Ctrl + C, you will see on which infinite loop it is getting stuck at.

Do next / n to navigate through the code. Display the variables by doing print x.

If you see that it is getting stuck at a function, type step to access it. There, you can continue inspecting with next.

Finally, do quit when you have figured out what needs to be reworked in your code. Then you can repeat the process.

Usage on incorrect values

Use *primes@10 for example to print the dereferenced values in an array. If you forget the * at the beginning, it will print memory addresses.

Use clear main and break sum to change between breakpoints.

Use watch total so you don't have to do print total at each loop iteration, it will do it automatically.

In GDB, you can use set args 20 to intentionally set it to a value that you know will yield wrong results.

Usage on a Segfault

backtrace full will tell you exactly what functions has been called, and prints out everything in one command.

Don't forget to test the tests that you knew passed before, after making changes. Make sure the changes don't break the passing test cases.

Usage of Valgrind

- Memory bugs are hard to find, until strings some variables changes values randomly.

- Valgrind is a suite of profiling tools that allows you to check your code in a number of ways.

- Default one: the memcheck tool, it's also perhaps the most powerful one. It runs your code inside of a virtual machine. It then instruments all of the memory accesses that you are performing, and it double checks to make sure your pointer accesses are valid.

Installation example:

sudo apt-get install valgrind

- Make sure to add the

-gflag to the compiler, which sets up debugging information. - Also turn on

-Wall -Werrorso your compiler can tell you what is wrong as much as possible.

First step is valgrind ./program to start off.

The numbers between == at the beginning of each line is the Process ID Valgrind is currently working on.

Some flags to use:

--leak-check=full: Prints detailed info for each detected memory leak, where the memory was allocated, how big it is, and whether it's reachable or not.

--leak-check=summary: Only prints the final summary. Useful for quick checks.

--track-origins=yes: Traces the origin of uninitialized memory so you can see where in your code you forgot to initialize it.

--show-leak-kinds=all: By default, Valgrind only shows certain leak categories. With all, you'll see:

- Definitely lost: memory you 100% forgot to free.

- Indirectly lost: memory was only reachable through a block that's definitely lost.

- Possibly lost: Valgrind can't be sure, but the pointer situation looks sketchy (e.g., pointer arithmetic changed the address).

- Still reachable: memory is still pointed to at exit, so it isn't technically leaked. Often from static/global allocations or intentional caches.

--num-callers=<n>: Shows <n> stack frames for each error. Default: 12. More stack depth for messy call chains.

--error-limit=no: Disables the default limit on errors shown.

--quiet: Minimal output, useful in scripts or CI logs.

--gen-suppressions=yes: Generates suppression entries for false positives. For library code you can't change.

--log-file=<file>: Saves all output into a file.

Usage of GCC/Clang Sanitizer Flags

-fsanitize=address: ASan (AddressSanitizer). Out-of-bounds reads/writes, use-after-free, stack buffer overflows, heap corruption.

-fsanitize=undefined: UBSan (UndefinedBehaviorSanitizer). For undefined behavior: integer overflow, invalid shifts, null deref in some cases, type punning errors. Often used alongside ASan.

-fsanitize=leak: LSan (LeakSanitizer). For memory leaks at program exit.

-fsanitize=thread: TSan (ThreadSanitizer). For data races, thread-related undefined behavior. Slower, but very useful in multi-threaded C/C++.

-fsanitize=memory: MSan (MemorySanitizer). For use of unitialized memory. Slow, needs special runtime libraries, but catches stuff ASan misses.

-fsanitize=safe-stack: SafeStack. Splits stack into safe/unsafe parts to prevent some exploits. More for security hardening than bug hunting.

Combining Sanitizers:

- Address + Undefined:

-fsanitize=address,undefined: good default debug build. - Leak only:

-fsanitize=leak(or just let ASan handle it). - Thread:

-fsanitize=thread. Run it alone, it doesn’t mix well with ASan. - Always add

-g -O1or-g -O0when debugging so sanitizer output has usable stack traces.

Use both Valgrind and ASan for layered defense

- Asan is fast enough for day-to-day dev builds. Catches most runtime memory errors before you even think about Valgrind.

- Valgrind is the slow, final boss fight before release. Great at catching leaks and weirdness that slipped past ASan, especially in libraries you didn't compile yourself.

enum.c

This file, enum.c, is a simple C program that demonstrates the use of an enumeration type. Here's a breakdown of the code:

-

Header Inclusion:

#include <stdio.h>The

stdio.hlibrary is included for input and output functions, specifically for usingprintf. -

Enumeration Declaration:

enum month{jan, feb, mar, apr, may, jun, jul, aug, sep, oct, nov, dec};An enumeration type

monthis defined, representing the months of the year. The values in the enumeration are implicitly assigned integer values starting from 0 (jan= 0,feb= 1, ...,dec= 11). -

Function Definition:

enum month get_month(enum month m) { return(m); }The function

get_monthtakes an argument of typeenum monthand simply returns the same value. It's a minimal example to show how an enumeration can be passed to and returned from a function. -

Main Function:

int main() { printf("%u\n", get_month(apr)); return 0; }The

mainfunction:- Calls

get_monthwith theaprenumeration value (which corresponds to 3, assuming 0-based indexing), - Prints the returned value as an unsigned integer (

%uformat specifier). - Returns 0 to indicate successful execution.

- Calls

Output:

When this program is run, it will output:

3

This corresponds to the integer value of the apr enumeration.

Purpose:

This program is essentially a learning exercise to demonstrate the basics of declaring and using enumerations in C. It introduces how to:

- Create an enumeration,

- Pass an enumerated value to a function,

- Return an enumerated value from a function, and

- Print the integer representation of an enumerated value.

weekday.c

Purpose

This C program demonstrates the use of enum, switch, and case constructs in C by working with days of the week. It includes functions to get the next and previous day and prints the corresponding day names.

Code Explanation

-

Enum Definition:

enum day {sun, mon, tue, wed, thu, fri, sat};

Defines an enumerated typedayto represent days of the week.

-

print_day Function:

- Takes an enum

dayvalue as input and prints the corresponding day name using aswitchstatement. If the input is invalid, it prints an error message.

- Takes an enum

-

next_day Function:

- Takes an enum

dayvalue as input and computes the next day based on modulo arithmetic.

- Takes an enum

-

previous_day Function:

- Takes an enum

dayvalue as input and computes the previous day based on modulo arithmetic.

- Takes an enum

-

main Function:

- Demonstrates how to use the enumerated type and the functions:

- Initializes today as

fri. - Prints the current day.

- Prints an invalid day (to demonstrate error handling).

- Prints the next and previous days.

- Initializes today as

- Demonstrates how to use the enumerated type and the functions:

Example Usage

Here’s how the program would behave:

enum day today = fri;

print_day(today); // Outputs: friday

print_day(7); // Outputs: 7 is an error

print_day(next_day(today)); // Outputs: saturday

print_day(previous_day(today)); // Expected Output: thursday

Output Example

When you compile and run the program:

friday

7 is an error

saturday

thursday

Complete Code

#include <stdio.h>

enum day {sun, mon, tue, wed, thu, fri, sat};

void print_day (enum day d) {

switch(d) {

case sun: printf("sunday"); break;

case mon: printf("monday"); break;

case tue: printf("tuesday"); break;

case wed: printf("wednesday"); break;

case thu: printf("thursday"); break;

case fri: printf("friday"); break;

case sat: printf("saturday"); break;

default: printf("%d is an error", d);

}

}

enum day next_day (enum day d) {

return (d + 1) % 7;

}

enum day previous_day (enum day d) {

return (d + 6) % 7;

}

int main() {

enum day today = fri;

print_day(today);

printf("\n");

print_day(7);

printf("\n");

print_day(next_day(today));

printf("\n");

print_day(previous_day(today));

return 0;

}

Key Learning Points